Message from the workshop organisers:

Dear participants,

Thank you for participating in this Learning Analytics (LA) Short Course.

To take full advantage of this opportunity, we recommend the following process.

1. The session objectives, pre-reading, and pre-workshop reflection exercise are outlined in the course blog, which was emailed to you one week before the session.

https://datainformedelearning.blogspot.com/2018/04/learning-analytics-short-course.html

Please allocate one hour to the pre-session reflection exercise, using the resources of the course blog as a content buffet, to start your thinking process.

Please record your pre-session reflections and email them to the organisers by 8am on Monday, 11 June 2018, one day before the session.

2. During the interactive group activity on Tuesday, 12 June, we will work on your reflection exercise individually, and as a group, before sharing this amongst the participants.

3. The organisers envisage that this process will form the foundation for the next stage in the planning and implementation of LA use at NUHS.

__________________

Session Objectives

1. The definition of learning analytics

2. Types of learning analytics and their purpose in education

3. Tools in supporting learning analytics

4. How data-driven decision making differs from traditional decision making, and the potential future implications of this transition

5. How to draw/determine real-time and predictive data about students and trainees

PRE-SESSION ACTIVITY

1. Read the following articles

Goh, P.S. Learning Analytics in Medical Education. MedEdPublish. 2017 Apr; 6(2), Paper No:5. Epub 2017 Apr 4. https://doi.org/10.15694/mep.2017.000067 and SlideShare presentation by Rebecca Ferguson

(Podcast)

https://cloudplatform.googleblog.com/2013/11/kaplan-builds-online-education-platform-kapx-with-google-app-engine.html

https://edu.google.com/latest-news/stories/strayer-university-gcp/?modal_active=none

https://www.cio.com/article/3153389/it-industry/how-small-data-became-bigger-than-big-data.html

https://www.mckinsey.com/business-functions/operations/our-insights/the-ceo-guide-to-customer-experience

2. Prepare the following, BEFORE the workshop.

Reflect on your roles in medical education and clinical training. Make notes on the following, which we will add to during the "live" session.

What data do you currently have about students and trainees?

What data do you feel we should collect?

What analysis are we currently doing with this data?

What could we be doing?

How can data, and analysis of this data, improve our education and training efforts?

How could we do this?

Session takeaways:

7 byte-size ideas from Poh-Sun

and

5 (more) short takeaways from Poh-Sun

1 - Arguably the aim (outcome) of any learning session is to be able to THINK about (have a conversation), DO, and have a FEEL (opinion) about something, at the end of the educational and training process. Or gain Knowledge, Skills, and (form or change) Attitudes (KSA).

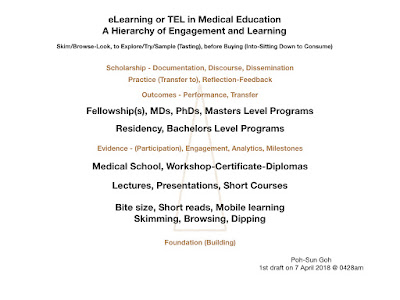

When starting out on this topic, the material and terminology will feel unfamiliar. With further reading, review of more material, repeated return to the topic, discussion and working through of the ideas with other participants, colleagues and collaborators, and starting and continuing to USE these ideas, you will progressively increase insight, understanding and skill in this area. Keep going. One step at a time. Iteratively. The more one thinks about and uses ideas and strategies, the more familiar with, and confident in, a topic or area of professional practice one becomes.

2 - Start with your goal or objective as a teacher or educator. What are your learning objectives? How will you know these are (being) achieved? What data, evidence, and observations inform you (that this has been accomplished)?

3 - Increasingly education, learning and training will be technology mediated, technology facilitated, and technology enhanced. Take advantage of your delivery and engagement platform and process to gather this data and evidence. In real time. To inform, and further customise your educational offering. Similar to what you are familiar with in a traditional classroom and training setting.

4 - Customisation and personalisation (personal learning) may simply require offering a "buffet" of (digital) content, and training scenarios, to suit individual preference and choice, for a specific purpose, at a specific time. Or use a "well stocked" digital repository for instructor facilitated, and specifically curated teaching and learning.

5 - Technology enhanced Learning (TeL) makes what we teach with, and assess on, visible, accessible, and assessable. We can "show what we teach with, and assess on". For students, trainees, peers, colleagues, administrators. In fact all potential stakeholders. To make this (data) available as evidence of scholarly activity, and educational scholarship (the Scholarship of Teaching and Learning, or SOTL).

Chan, T., Sebok-Syer, S., Thoma, B., Wise, A., Sherbino, J. and Pusic, M. Learning Analytics in Medical Education Assessment: The Past, The Present and The Future. AEM Education and Training, April 2018. https://onlinelibrary.wiley.com/doi/abs/10.1002/aet2.10087

Matt M. Cirigliano, Charlie Guthrie, Martin V. Pusic, Anna T. Cianciolo, Jennifer E. Lim-Dunham, Anderson Spickard III & Valerie Terry (2017) “Yes, and …” Exploring the Future of Learning Analytics in Medical Education, Teaching and Learning in Medicine, 29:4, 368-372, DOI: 10.1080/10401334.2017.1384731

Goh, P.S. Learning Analytics in Medical Education. MedEdPublish. 2017 Apr; 6(2), Paper No:5. Epub 2017 Apr 4. https://doi.org/10.15694/mep.2017.000067

Norman, G. (2012). Medical education: past, present and future. Perspectives on Medical Education, 1(1), 6–14. http://doi.org/10.1007/s40037-012-0002-7

Billy Tak Ming Wong, (2017) "Learning analytics in higher education: an analysis of case studies", Asian Association of Open Universities Journal, Vol. 12 Issue: 1, pp.21-40, https://doi.org/10.1108/AAOUJ-01-2017-0009

______________

above from

Goh, P.S. Learning Analytics in Medical Education. MedEdPublish. 2017 Apr; 6(2), Paper No:5. Epub 2017 Apr 4. https://doi.org/10.15694/mep.2017.000067

above abstract of paper accepted for presentation at upcoming 50th JSME meeting, 3 August 2018

Norman, G. (2012). Medical education: past, present and future. Perspectives on Medical Education, 1(1), 6–14. http://doi.org/10.1007/s40037-012-0002-7

"Understanding basic theory using a few illustrative examples. Mastering a topic by exposure to and experience with many examples

Typical examples or real-life scenarios can be used to illustrate theory, and help students understand fundamental principles. Mastering a topic usually requires exposure to and experience with many examples, both typical and atypical, common to uncommon including subtle manifestations of a phenomenon. The traditional method of doing this is via a long apprenticeship, or many years of practice with feedback and experience. A digital collection of educational scenarios and cases can support and potentially shorten this educational and training process. Particularly if a systematic attempt is made to collect and curate a comprehensive collection of all possible educational scenarios and case-based examples, across the whole spectrum of professional practice. Online access to key elements, parts of and whole sections of these learning cases; used by students with guidance by instructors under a deliberate practice and mastery training framework, can potentially accelerate the educational process, and deepen learning."

above from

Goh, P.S. A series of reflections on eLearning, traditional and blended learning. MedEdPublish. 2016 Oct; 5(3), Paper No:19. Epub 2016 Oct 14. http://dx.doi.org/10.15694/mep.2016.000105

see also

https://www.iss.nus.edu.sg/graduate-programmes/programme/detail/master-of-technology-in-enterprise-business-analytics

http://executive-education.nus.edu/programmes/31-leading-with-big-data-analytics-machine-learning/#

https://www.cnbc.com/2018/05/09/zuckerberg-invests-in-blockchain-to-keep-facebook-relevant.html

https://www.wired.com/story/whats-the-deal-with-facebook-and-the-blockchain/

http://fortune.com/2018/05/09/facebook-blockchain-team/

https://www.theverge.com/2018/5/8/17332894/facebook-blockchain-group-employee-reshuffle-restructure-david-marcus-kevin-weil

https://www.cnet.com/news/facebook-is-reportedly-starting-a-blockchain-team/

https://e27.co/blockchain-future-data-privacy-20180525/

Blockchain and individuals’ control over personal data in European data protection law (by Roberta Filippone, Thesis for Master of Law, 2017)

"The holy grail for privacy engineers is to create a semi-permeable membrane between generators and users of data that allows general inferences to be drawn from our digital footprint without personal identification leaking out."

quoted from article below

GDPR is a start, but not enough to protect privacy on its own (by John Thornhill, in the Financial Times, 25 May 2018)

How Facebook's tentacles reach further than you think

(by Joe Miller, on BBC website, 26 May 2017)

https://labs.rs/en/the-human-fabric-of-the-facebook-pyramid/

https://labs.rs/en/browsing-histories/

https://www.cnet.com/news/facebook-reportedly-gave-tech-giants-greater-access-to-users-data/

https://www.cnet.com/news/facebooks-terrible-year-just-got-worse-what-you-need-to-know/
